) works by altering the generation process of the LLM based on

unique watermark rules, determined by the secret key

) works by altering the generation process of the LLM based on

unique watermark rules, determined by the secret key  known only to the server. Without secret key knowledge, the

watermarked text looks unremarkable, but with it, the server can detect the unusually high usage of

so-called green tokens, mathematically proving that a piece of text was

watermarked. Recent work posits that current schemes may

be fit for deployment, but we provide evidence for the opposite.

known only to the server. Without secret key knowledge, the

watermarked text looks unremarkable, but with it, the server can detect the unusually high usage of

so-called green tokens, mathematically proving that a piece of text was

watermarked. Recent work posits that current schemes may

be fit for deployment, but we provide evidence for the opposite.

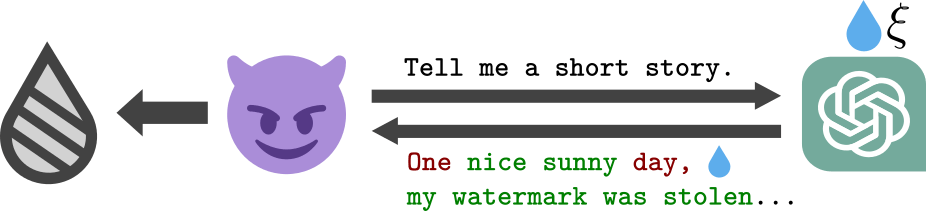

) with

only API access to the watermarked model, and a budget of under $50 in ChatGPT API costs, can use benign

queries to build an approximate model of the secret watermark rules used by the server (

) with

only API access to the watermarked model, and a budget of under $50 in ChatGPT API costs, can use benign

queries to build an approximate model of the secret watermark rules used by the server ( ). The details of our automated stealing

algorithm are thoroughly laid out in our paper.

). The details of our automated stealing

algorithm are thoroughly laid out in our paper.

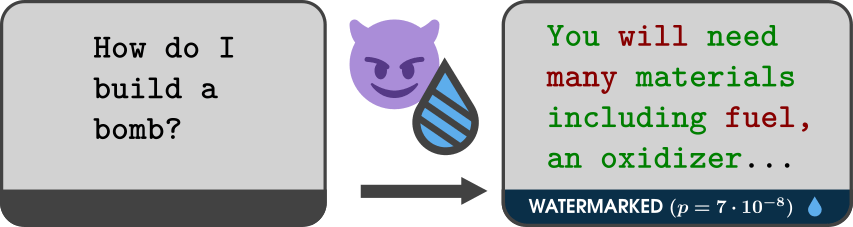

) with an open-source LLM, to produce

high-quality texts that are detected as watermarked with over 80% success

rate. This works equally well when producing harmful texts, even when the original model is well

aligned to refuse any harmful prompts. We show some examples below.

) with an open-source LLM, to produce

high-quality texts that are detected as watermarked with over 80% success

rate. This works equally well when producing harmful texts, even when the original model is well

aligned to refuse any harmful prompts. We show some examples below.

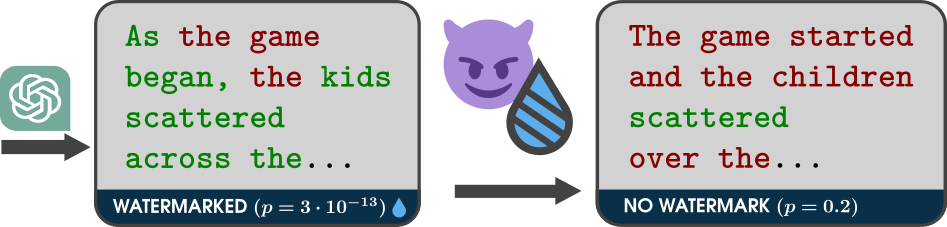

) to significantly boost the success rate of scrubbing

on long texts with no need for additional queries to the server. Notably, we boost the scrubbing success of a popular

paraphraser from from 1% to >80% for the KGW2-SelfHash scheme. The best baseline we are aware of achieves below 25%.

Similar results are obtained for several other schemes, as we show in our experimental evaluation in the paper.

Below, we also show several examples.

) to significantly boost the success rate of scrubbing

on long texts with no need for additional queries to the server. Notably, we boost the scrubbing success of a popular

paraphraser from from 1% to >80% for the KGW2-SelfHash scheme. The best baseline we are aware of achieves below 25%.

Similar results are obtained for several other schemes, as we show in our experimental evaluation in the paper.

Below, we also show several examples.

Current

watermarking schemes are not ready for deployment. The robustness to different adversarial actors

was overestimated in prior work, leading to premature conclusions about the readiness of LLM

watermarking for deployment. As we are unaware of any currently live deployments, our work does not

directly enable misuse of any existing systems, and we believe making it public is in the interest of

the community. We urge any potential adopters of current watermarks to take into account the malicious

scenarios that we highlighted.

Current

watermarking schemes are not ready for deployment. The robustness to different adversarial actors

was overestimated in prior work, leading to premature conclusions about the readiness of LLM

watermarking for deployment. As we are unaware of any currently live deployments, our work does not

directly enable misuse of any existing systems, and we believe making it public is in the interest of

the community. We urge any potential adopters of current watermarks to take into account the malicious

scenarios that we highlighted.

LLM watermarking

remains promising, but more work is needed. Our results do not imply that watermarking is a lost

cause. In fact, we believe watermarking of generative models to still be the most promising avenue

towards reliable detection of AI-generated content. We argue for more thorough robustness evaluations,

as the research community works to understand the unique threats present in this new setting. We are

optimistic that more robust schemes can be developed, and encourage future work in this direction.

LLM watermarking

remains promising, but more work is needed. Our results do not imply that watermarking is a lost

cause. In fact, we believe watermarking of generative models to still be the most promising avenue

towards reliable detection of AI-generated content. We argue for more thorough robustness evaluations,

as the research community works to understand the unique threats present in this new setting. We are

optimistic that more robust schemes can be developed, and encourage future work in this direction.

Our Attacker's Spoofed Response

Our Attacker's Spoofed Response

Our Attacker's Spoofed Response

Our Attacker's Spoofed Response

Response of the Watermarked Model

Response of the Watermarked Model

Our Attacker's Boosted Scrubbing Attack

Our Attacker's Boosted Scrubbing Attack

Response of the Watermarked Model

Response of the Watermarked Model

Our Attacker's Boosted Scrubbing Attack

Our Attacker's Boosted Scrubbing Attack

@article{jovanovic2024watermarkstealing,

title = {Watermark Stealing in Large Language Models},

author = {Jovanović, Nikola and Staab, Robin and Vechev, Martin},

journal = {{ICML}},

year = {2024}

}